A CEO’s Guide to Building a Data Warehouse

- GrowthBI

- Jul 17, 2025

Updated: Aug 12, 2025

Time and again, I have seen mid-size companies become internally disconnected. Finance is buried in its ERP, the sales team lives inside its CRM, and the operations crew relies on its own logistics software. This fragmented data environment creates serious costs that eat directly into your profits.

Your teams likely lose hours every week just trying to make sense of it all. They export data to spreadsheets, try to stitch conflicting information together, and debate whose numbers are correct. This activity wastes time and creates a massive drag on your operations.

Decisions are delayed or made on incorrect information. You are left to speculate on critical questions like:

What is our actual cost per unit when we factor in all fluctuating logistics and material costs?

Which of our product lines are truly the most profitable after accounting for returns and support tickets?

Are our production schedules in sync with our real-time sales forecasts?

The fallout is real and measurable. I have seen a single delayed production run, caused by bad inventory data, cost a company thousands in lost revenue and damage the trust they had with their client. Over time, these small issues snowball into major roadblocks.

When different departments look at different data, it is almost impossible for them to pull in the same direction. Without a shared reality, your teams cannot work toward common goals.

Ultimately, a poor data setup forces you to be reactive. You are constantly putting out fires instead of planning for the future. You miss chances to optimize your pricing, spot cross-sell opportunities, or get ahead of supply chain disruptions.

This is why building a data warehouse is fundamental. It fixes the core problem by creating a single, reliable source of information for everyone in the organisation.

Defining Your Business Case

Building a data warehouse is a strategic project first and a technical one second. True success starts when you translate your everyday operational headaches into measurable business goals.

To build a strong business case that gets everyone on board, your leadership team needs to answer some tough questions. The answers you come up with will be the bedrock of your data strategy and will justify the entire investment. We have put together a guide on creating a practical data strategy framework for mid-sized companies that can help structure this process.

Here are the kinds of questions you should be asking:

What critical decisions are we failing to make right now because our data is untrustworthy?

Which specific reports or dashboards would give us a real competitive advantage if we could trust them and get them on demand?

How would integrated data improve our financial planning and operational forecasting cycles?

Australian businesses are increasingly adopting data warehousing, mirroring a global shift driven by the need for solid analytics in industries from finance to manufacturing. In fact, the global data warehouse market is expected to surpass USD 30 billion by 2025. Australian companies are a big part of this, replacing outdated systems with cloud-based solutions to stay competitive. You can read more about the growth of the data warehousing market here.

Answering these questions forces a crucial shift in thinking. You stop talking about technical needs and start defining business outcomes. The goal is no longer a technical deliverable; it is a business result, such as “improve profitability” or “increase operational efficiency.” This clarity gets everyone, from the executive team down to the department heads, aligned and pulling in the same direction.

Making Key Architectural and Platform Choices

Once you have a solid business case, it is time to make some foundational technical decisions. For a non-technical leader, this part can feel daunting, but it comes down to a few core choices that have big implications for your project's cost, flexibility, and future maintenance.

You have two primary options for your data infrastructure:

On-premise data warehouses require you to purchase servers, hire IT staff, and manage everything internally. You own the hardware, control the software, and handle maintenance. This approach demands significant upfront capital investment plus ongoing costs for electricity, cooling, security, and specialised staff.

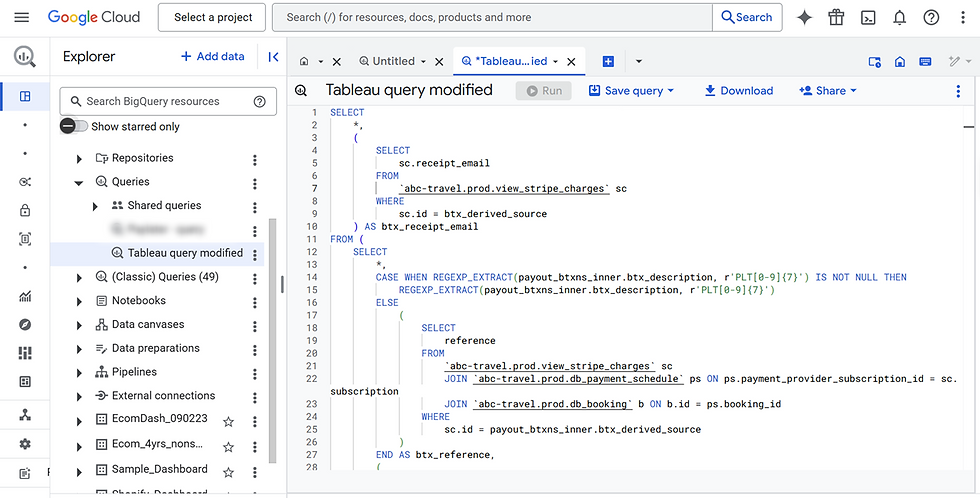

Cloud data warehouses operate on a pay-as-you-go model through platforms like Snowflake or Google BigQuery. The provider handles infrastructure management, security updates, and scaling. You load your data and pay for the storage and processing power you consume.

The cloud approach offers three critical advantages: faster deployment (weeks instead of months), lower initial costs (no hardware purchases), and automatic scalability (systems grow with your business). For most mid-sized businesses, the cloud is a much faster and more cost-effective way to get going.
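
To make the pay-as-you-go model concrete, here is a rough Python sketch of how cumulative spend compares over time. Every figure in it is a hypothetical placeholder, not a real price from Snowflake, BigQuery, or any hardware vendor, and it assumes a simplified cloud bill made up of only storage and compute hours; real pricing has more dimensions. Treat it as a template for plugging in your own quotes.

```python
from dataclasses import dataclass

# All figures below are hypothetical placeholders, not real vendor prices.

@dataclass
class OnPremiseCosts:
    upfront_hardware: float   # one-off spend on servers, storage and licences
    monthly_running: float    # power, cooling and hardware maintenance

@dataclass
class CloudCosts:
    storage_tb: float              # volume of data stored
    price_per_tb_month: float      # storage rate (assumed)
    compute_hours_month: float     # processing time consumed by queries
    price_per_compute_hour: float  # compute rate (assumed)

    def monthly_bill(self) -> float:
        """Pay-as-you-go: pay only for the storage held and compute consumed."""
        return (self.storage_tb * self.price_per_tb_month
                + self.compute_hours_month * self.price_per_compute_hour)

def cumulative_costs(onprem: OnPremiseCosts, cloud: CloudCosts, months: int) -> tuple[float, float]:
    """Return (on-premise total, cloud total) spent after the given number of months."""
    onprem_total = onprem.upfront_hardware + onprem.monthly_running * months
    cloud_total = cloud.monthly_bill() * months
    return onprem_total, cloud_total

EXAMPLE_ONPREM = OnPremiseCosts(upfront_hardware=150_000, monthly_running=4_000)
EXAMPLE_CLOUD = CloudCosts(storage_tb=2, price_per_tb_month=25,
                           compute_hours_month=200, price_per_compute_hour=3)

for months in (1, 12, 36):
    onprem_total, cloud_total = cumulative_costs(EXAMPLE_ONPREM, EXAMPLE_CLOUD, months)
    print(f"Month {months}: on-premise ${onprem_total:,.0f}, cloud ${cloud_total:,.0f}")
```

The shape of the calculation matters more than the made-up numbers: on-premise costs start high because of the hardware purchase, while the cloud bill starts low and grows with usage. A business with heavy, constant query workloads should model its own compute hours carefully.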

Data Warehouse Platform Comparison for Mid-Size Businesses

Choosing between a cloud and on-premise solution is a significant decision. The table below breaks down the key factors from a business perspective to help you weigh the pros and cons.

| Factor | Cloud Data Warehouse (e.g., Snowflake, BigQuery) | Traditional On-Premise Warehouse |
|---|---|---|
| Initial Cost | Low upfront costs; pay-as-you-go pricing model. | High upfront investment in hardware, software, and facilities. |
| Scalability | Storage and compute power scale up or down on demand. | Scaling requires purchasing and installing new hardware, which can be slow and expensive. |
| Maintenance | The cloud provider manages all infrastructure, patches, and updates. | Your internal IT team is responsible for all maintenance and security. |
| Time to Value | Fast to set up; the platform itself can often be provisioned within hours or days. | Can take months to procure, install, and configure before it is usable. |
| Team Skills | Requires skills in cloud platforms and modern data tools. | Requires traditional database administration (DBA) and server management skills. |

For most companies today, the agility and lower barrier to entry of a cloud data warehouse make it the clear winner. However, businesses with strict regulatory or data sovereignty requirements might still lean towards an on-premise solution for maximum control.

Choosing Your Data Pipeline Approach

Next, you need to decide how to get data from your various systems, like your CRM, accounting software, and marketing platforms, into your new warehouse. This is often called the data pipeline, and there are two main ways to tackle it: ETL and ELT.

ETL (Extract, Transform, Load): This is the traditional method. You pull data from the source, clean it up and structure it (transform), and then load the finished product into the warehouse. It works, but it demands a lot of planning upfront.

ELT (Extract, Load, Transform): This is the modern and more flexible approach that cloud warehouses have made possible. You pull the raw data out of the source system and load it straight into the warehouse (Extract, Load). The transformation happens later, using the warehouse's own powerful engine to shape the data for analysis whenever you need it.
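
The difference between the two approaches is easiest to see side by side. The sketch below uses Python's built-in sqlite3 module as a stand-in for a cloud warehouse (an assumption for illustration only; Snowflake and BigQuery have their own connectors and SQL dialects) and a few hypothetical CRM rows. The ETL path cleans the data in application code before loading it; the ELT path loads the raw rows untouched and applies the same cleaning later, in SQL, inside the database.

```python
import sqlite3

# Hypothetical raw rows extracted from a CRM export: (deal_id, customer, amount, status).
RAW_DEALS = [
    ("D-1", "acme pty ltd ", "12,500.00", "won"),
    ("D-2", "Beta Co", "8,000.00", "lost"),
    ("D-3", "ACME PTY LTD", "4,250.50", "won"),
]

def etl(conn):
    """ETL: transform in code first, then load only the finished table."""
    cleaned = [
        (deal_id, name.strip().upper(), float(amount.replace(",", "")))
        for deal_id, name, amount, status in RAW_DEALS
        if status == "won"
    ]
    conn.execute("CREATE TABLE won_deals_etl (deal_id TEXT, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO won_deals_etl VALUES (?, ?, ?)", cleaned)

def elt(conn):
    """ELT: load the raw rows as-is, then transform with the database's own engine."""
    conn.execute("CREATE TABLE raw_deals (deal_id TEXT, customer TEXT, amount TEXT, status TEXT)")
    conn.executemany("INSERT INTO raw_deals VALUES (?, ?, ?, ?)", RAW_DEALS)
    # The transformation is defined in SQL and can be changed later without re-extracting.
    conn.execute("""
        CREATE VIEW won_deals_elt AS
        SELECT deal_id,
               UPPER(TRIM(customer)) AS customer,
               CAST(REPLACE(amount, ',', '') AS REAL) AS amount
        FROM raw_deals
        WHERE status = 'won'
    """)

conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
query = "SELECT customer, SUM(amount) FROM {} GROUP BY customer"
print(conn.execute(query.format("won_deals_etl")).fetchall())
print(conn.execute(query.format("won_deals_elt")).fetchall())
```

Both routes give the same answer here. The practical difference is that ELT keeps `raw_deals` in the warehouse, so a new business question can be answered by writing another view rather than rebuilding the pipeline.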

Now, let's talk about getting started. Trying to build an all-encompassing data warehouse in one go is a recipe for disaster. I have seen it happen time and again: teams try to connect every single data source at once, and this "big bang" approach gets bogged down in analysis paralysis, leaving them with nothing to show for months, sometimes years.

A phased implementation is a much smarter way to do this.

Instead of attempting to connect every data source simultaneously, you pick one high-impact business problem and solve it. That is your pilot project. The point is to get a quick win, prove the concept, and show tangible value to leadership. This builds momentum and gives your team a chance to learn and refine the process on a smaller, manageable scale.

Your First Pilot Project

Structure your pilot as a time-bound project with specific deliverables. You should aim to complete it within 90 to 120 days. This tight timeframe forces everyone to concentrate on what is absolutely essential, cutting out the noise and "nice-to-haves" for later. A successful pilot at this stage is your proof of concept, making it much easier to justify the investment for the next phase.

So, what does this look like in practice?

Pinpoint Critical Sources: Start small. Identify the two or three systems that hold the key to solving your chosen problem. For a new sales dashboard, that is almost always your CRM and your financial platform. Nothing else for now.

Sketch a Simple Data Model: This is a conversation with the business users. You sit down with them and map out how the data from those systems needs to link up to create the report they need.

Build Your First Pipelines: Now the technical work begins. You will set up the initial data flows to pull information from those few chosen sources and load it into your new cloud data warehouse.

Deliver the First Reports: Finally, you build the dashboard or report that directly answers the business pain point you identified at the start.
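
To show how small a pilot can be, here is a minimal sketch of the modelling, pipeline, and reporting steps for the sales dashboard example. It assumes hypothetical CRM accounts and finance invoices that share a `customer_id`, again uses sqlite3 as a stand-in warehouse, and models the data as one customer dimension and one invoice fact table. That is a common starting shape, not a prescribed design; your own model should come out of the conversation with business users.

```python
import sqlite3

# Hypothetical extracts from the two pilot sources.
CRM_ACCOUNTS = [  # (customer_id, customer_name, account_owner)
    (1, "Acme Pty Ltd", "Priya"),
    (2, "Beta Co", "Tom"),
    (3, "Gamma Group", "Priya"),
]
FINANCE_INVOICES = [  # (invoice_id, customer_id, invoice_date, amount_aud)
    ("INV-100", 1, "2025-06-03", 12000.0),
    ("INV-101", 2, "2025-06-10", 5000.0),
    ("INV-102", 1, "2025-06-21", 3000.0),
    ("INV-103", 3, "2025-06-28", 7500.0),
]

def build_model(conn):
    """A simple data model: one customer dimension linked to one invoice fact table."""
    conn.executescript("""
        CREATE TABLE dim_customer (
            customer_id   INTEGER PRIMARY KEY,
            customer_name TEXT NOT NULL,
            account_owner TEXT NOT NULL
        );
        CREATE TABLE fact_invoice (
            invoice_id   TEXT PRIMARY KEY,
            customer_id  INTEGER NOT NULL REFERENCES dim_customer(customer_id),
            invoice_date TEXT NOT NULL,
            amount_aud   REAL NOT NULL
        );
    """)

def load(conn):
    """The first pipelines: one load per source system."""
    conn.executemany("INSERT INTO dim_customer VALUES (?, ?, ?)", CRM_ACCOUNTS)
    conn.executemany("INSERT INTO fact_invoice VALUES (?, ?, ?, ?)", FINANCE_INVOICES)

def revenue_by_owner(conn):
    """The first report: invoiced revenue per account owner, highest first."""
    return conn.execute("""
        SELECT c.account_owner, SUM(f.amount_aud) AS revenue
        FROM fact_invoice AS f
        JOIN dim_customer AS c ON c.customer_id = f.customer_id
        GROUP BY c.account_owner
        ORDER BY revenue DESC
    """).fetchall()

conn = sqlite3.connect(":memory:")
build_model(conn)
load(conn)
print(revenue_by_owner(conn))
```

The join on `customer_id` is the whole point of the pilot: it answers a question neither the CRM nor the finance system can answer on its own.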

If there is one piece of advice I can give, it is this: involve your end-users from the absolute beginning. If the sales director and finance controller help you define the pilot, the final dashboard will be something they actually use. That early collaboration is the secret to getting the whole organisation on board.

Governing Your Data Warehouse for Long-Term Success

You have built your data warehouse. That is a massive achievement, but the work does not end at deployment. Treat your new data warehouse as an ongoing business system that requires continuous management. Without the right oversight, even the most brilliantly designed system can see its value degrade as business needs evolve and new data flows in.

To protect your investment and make sure it remains the company's single source of truth, you need a practical governance framework. Data governance establishes clear ownership and straightforward operational procedures that streamline decision-making.

Defining Data Ownership and Quality

First, you need to assign data ownership. The idea is simple: the department that creates and understands the data is the one responsible for its accuracy. Your finance team, for instance, should ‘own’ all financial data sitting in the warehouse.

This means they are responsible for a few key things:

Validating Accuracy: Routinely checking that the figures in the warehouse line up with the source systems.

Defining Business Rules: Establishing the official definitions for critical metrics like "revenue" or "customer acquisition cost."

Approving Changes: Giving the final sign-off on any new financial data sources or changes to existing ones.
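
The "Validating Accuracy" duty lends itself to automation. Below is a minimal sketch of a reconciliation check, assuming the finance team can export monthly revenue totals from both the ERP and the warehouse. The totals, the period format, and the one-cent tolerance are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ReconciliationResult:
    period: str
    source_total: float
    warehouse_total: float
    tolerance: float

    @property
    def difference(self) -> float:
        return self.warehouse_total - self.source_total

    @property
    def passed(self) -> bool:
        return abs(self.difference) <= self.tolerance

def reconcile(source_totals, warehouse_totals, tolerance=0.01):
    """Compare per-period totals from a source system against the warehouse.

    A period missing from the warehouse is treated as zero, so it fails the check.
    Periods that exist only in the warehouse are not examined here.
    """
    results = []
    for period, source_total in sorted(source_totals.items()):
        warehouse_total = warehouse_totals.get(period, 0.0)
        results.append(ReconciliationResult(period, source_total, warehouse_total, tolerance))
    return results

# Hypothetical monthly revenue totals: one export from the ERP, one from the warehouse.
erp = {"2025-05": 182_340.50, "2025-06": 199_870.00, "2025-07": 175_002.25}
warehouse = {"2025-05": 182_340.50, "2025-06": 198_870.00, "2025-07": 175_002.25}

for r in reconcile(erp, warehouse):
    status = "OK" if r.passed else f"MISMATCH ({r.difference:+,.2f})"
    print(f"{r.period}: {status}")
```

A failing period gives the data owner a concrete discrepancy to chase, rather than a general sense that "the numbers look off".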

When you assign responsibility like this, you build a culture of accountability. For a deeper dive, we have outlined some proven strategies to improve data quality.

A great way to manage this is with a cross-functional governance committee. Even a small group with representatives from finance, sales, and operations can make a huge difference. They can meet quarterly to review data quality reports, prioritise new data requests, and make sure the data roadmap stays aligned with the overall business strategy.

Planning for Scalability and Future Growth

Your business is always changing, and your data warehouse needs to keep up. As you grow, you will want to pull in new data from marketing campaigns, logistics platforms, or other operational systems. A solid governance plan gives you a clear process for adding these new sources without breaking what is already there.
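
One lightweight way to put that process into practice is a data contract check: before a new source is connected, a sample of its rows is compared against the columns and types your existing reports depend on. The sketch below assumes a hypothetical marketing campaign feed and a hypothetical contract; extra columns are allowed, because they cannot break existing reports, while missing or mistyped ones are reported.

```python
# Hypothetical contract: the columns and types that existing reports rely on.
EXPECTED_COLUMNS = {
    "campaign_id": str,
    "campaign_name": str,
    "spend_aud": float,
    "start_date": str,
}

def check_new_source(sample_rows, expected=EXPECTED_COLUMNS):
    """Return a list of problems found in a sample from a proposed new source.

    An empty list means the sample matches the contract and can go to the
    governance committee for approval.
    """
    problems = []
    for i, row in enumerate(sample_rows):
        missing = expected.keys() - row.keys()
        if missing:
            problems.append(f"row {i}: missing columns {sorted(missing)}")
        for column, expected_type in expected.items():
            if column in row and not isinstance(row[column], expected_type):
                problems.append(
                    f"row {i}: {column} is {type(row[column]).__name__}, "
                    f"expected {expected_type.__name__}"
                )
    return problems

sample = [
    {"campaign_id": "C-1", "campaign_name": "Spring", "spend_aud": 1200.0,
     "start_date": "2025-09-01", "channel": "search"},  # extra column is fine
    {"campaign_id": "C-2", "campaign_name": "Winter", "spend_aud": "900"},
]
print(check_new_source(sample))
```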

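On a cloud platform, this kind of scalability is backed by the provider's significant infrastructure investment, so adding new sources does not require buying hardware of your own.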

At GrowthBI, we specialise in building the data foundations that drive real business outcomes. We deliver the custom dashboards and data integrations leadership teams need to move from scattered spreadsheets to a single source of truth. If you are ready to make data-informed decisions, explore our services and reach us at https://www.growthbi.com.au.