How to Improve Data Quality: Proven Strategies for Mid-Sized Companies

- GrowthBI

- Jul 16, 2025

Updated: Sep 12, 2025

Improving your data quality is about building a solid framework for how data is defined, managed, and monitored across your business. Treat it as a continuous business function that involves clear ownership, standardised information entry, and automated checks to catch errors before they affect your reports.

Australian firms face an average financial impact of A$493,000 annually due to data integrity issues, according to recent research from IDM. Furthermore, 48% of Australian organisations admitted to losing a competitive advantage because of poor data quality, a figure well above the 29% global average.

The same report from IDM found that 84% of Australian companies with solid information management directly link it to growth in revenue and profitability.

Poor data is a direct threat to your bottom line, operational plans, and competitive position. When your leadership team cannot trust the numbers, every decision becomes a gamble. The problem often begins with minor inconsistencies. For instance:

In Sales: One person logs a prospect as "Warm," while another uses "Engaged" for the same level of interest. This skews sales pipeline forecasts and leads to wasted marketing expenditure based on bad information. A unified sales pipeline dashboard, built on one agreed set of status definitions, keeps everyone working from the same data and supports accurate forecasting across the team.

In Operations: A manufacturing team does not consistently enter product defect codes. This makes it impossible to find the root cause of quality issues, leading to wasted materials and production delays.

In Finance: Different team members categorise invoices under slightly different expense codes. This small difference complicates budget tracking and hinders accurate financial planning.

These individual errors compound over time. They create a ripple effect that undermines teamwork and slows the entire decision-making process.

The Financial and Competitive Impact

The consequences of poor data quality are concrete and measurable. The true cost of a poor data setup is the cumulative damage from flawed decisions, missed opportunities, and constant operational friction. Fixing these foundational problems is a core business imperative and essential for growth in a competitive market. A clean data foundation provides the clarity needed to plan for the future with confidence.

When reports are built on unreliable data, leadership meetings shift from strategy discussions to debates about whose numbers are correct. That wasted time is a significant hidden cost that reduces agility and stalls progress.

Building a Reliable Data Foundation

Addressing poor data quality involves building a repeatable framework that prioritises business outcomes. Think of it as creating a system of accountability and clear rules to prevent bad data from entering your systems.

You do not need a large budget to begin. The key is to make incremental improvements that deliver immediate value. This approach builds momentum. By focusing on the core pillars of ownership, standards, and validation, you lay the groundwork for trustworthy reports and more confident decisions.

Assigning Clear Data Ownership

First, you must treat your data like any other valuable company asset. This means someone must own it. When no one is clearly responsible for a dataset, its quality will inevitably decline.

For example, your Head of Sales should be accountable for the accuracy of your CRM data. Your Operations Manager should own the data from your inventory and production systems. With this clarity, when a dashboard shows conflicting numbers, everyone knows who needs to investigate and fix the problem.

Assigning ownership transforms data quality from a vague problem into a concrete responsibility. It empowers the people who understand the business context of the data to maintain its quality.

This creates a direct link between data quality and business performance. When the Head of Sales relies on clean CRM data for the quarterly forecast, they have a vested interest in their team entering it correctly.

Creating and Enforcing Data Standards

Once ownership is established, the next step is to define what "good" data looks like. This means creating clear standards for how information is recorded across the business. Inconsistent definitions are a massive source of confusion and flawed analysis.

Consider a typical SaaS company. What exactly is a "qualified lead"?

Marketing might define it as anyone who downloaded a whitepaper.

Sales might argue it is only qualified after a discovery call.

This disconnect leads to inaccurate pipeline forecasts and friction between the teams. The solution is to have them agree on a shared definition. This business-led discussion results in a standard that is documented and implemented in both the CRM and marketing automation platforms. We have outlined how to connect data initiatives to business goals in our guide on a practical data strategy framework for mid-sized companies.
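One way to stop an agreed definition from drifting is to write it down once, in a form that every system and report can reuse. The Python sketch below is illustrative only: the `Lead` fields and the rule that a lead needs both a content download and a completed discovery call are hypothetical stand-ins for whatever your teams actually agree on.

```python
from dataclasses import dataclass


@dataclass
class Lead:
    email: str
    downloaded_content: bool   # marketing signal, e.g. a whitepaper download
    discovery_call_done: bool  # sales signal


def is_qualified_lead(lead: Lead) -> bool:
    """The single agreed definition (hypothetical rule: both signals required).

    CRM reports, marketing automation syncs and forecasts all call this one
    function instead of each team applying its own interpretation.
    """
    return lead.downloaded_content and lead.discovery_call_done


leads = [
    Lead("a@example.com", downloaded_content=True, discovery_call_done=True),
    Lead("b@example.com", downloaded_content=True, discovery_call_done=False),
    Lead("c@example.com", downloaded_content=False, discovery_call_done=True),
]
qualified = [lead.email for lead in leads if is_qualified_lead(lead)]
print(qualified)  # ['a@example.com']
```

Under marketing's original rule the first two leads would count, and under sales' rule the first and third. A shared definition removes that gap from every report at once.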

This standardisation process should cover all critical data points, from customer types and product codes to financial transaction labels.

The infographic below shows a simple way to think about defining these quality standards.

It all begins with identifying the key dimensions of quality, like accuracy and completeness, and then setting measurable targets for each one.

To get a clearer picture of what these dimensions mean, this table breaks them down and shows the business impact of getting them wrong.

Key Data Quality Dimensions and Business Impact

| Data Quality Dimension | Description | Business Impact Example (If Poor) |
|---|---|---|
| Accuracy | Does the data correctly reflect the real-world object or event? | An incorrect customer address leads to failed deliveries and wasted shipping costs. |
| Completeness | Is all the necessary data present? | Missing contact information in the CRM prevents the sales team from following up on leads. |
| Consistency | Is the same data stored the same way across different systems? | The finance system lists a client as "ABC Corp," while the CRM has "ABC Corporation Pty Ltd," causing duplicate records and skewed reporting. |
| Timeliness | Is the data available when it's needed? | Sales figures that are a week old are useless for making daily operational decisions. |
| Uniqueness | Is this the only record for this entity? | Duplicate customer profiles lead to sending multiple marketing emails, annoying customers and inflating engagement metrics. |
| Validity | Does the data conform to a defined format or rule? | A phone number field that accepts text ("Not available") breaks automated diallers and SMS campaigns. |
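Several of these dimensions can be turned into simple percentages you can track over time. The Python sketch below assumes a handful of made-up CRM contact records and an assumed phone rule (exactly 10 digits once spaces are removed, which suits Australian numbers); it scores completeness, uniqueness and validity for individual fields.

```python
import re

# Hypothetical CRM contact records.
records = [
    {"id": 1, "email": "jo@example.com", "phone": "0412 345 678"},
    {"id": 2, "email": "", "phone": "Not available"},
    {"id": 3, "email": "jo@example.com", "phone": "0298765432"},
    {"id": 4, "email": "sam@example.com", "phone": None},
]

# Assumed validity rule: exactly 10 digits once spaces are removed.
PHONE_RE = re.compile(r"\d{10}")


def completeness(rows, field):
    """Share of records where the field is present and not blank."""
    filled = [r for r in rows if r.get(field) not in (None, "")]
    return len(filled) / len(rows)


def uniqueness(rows, field):
    """Share of non-blank values that are distinct (1.0 means no duplicates)."""
    values = [r[field] for r in rows if r.get(field)]
    return len(set(values)) / len(values) if values else 1.0


def validity(rows, field, pattern):
    """Share of non-blank values that match the format rule."""
    values = [r[field] for r in rows if r.get(field)]
    valid = [v for v in values if pattern.fullmatch(v.replace(" ", ""))]
    return len(valid) / len(values) if values else 1.0


print(f"Email completeness: {completeness(records, 'email'):.0%}")    # 75%
print(f"Email uniqueness: {uniqueness(records, 'email'):.0%}")        # 67%
print(f"Phone validity: {validity(records, 'phone', PHONE_RE):.0%}")  # 67%
```

Accuracy and timeliness usually need an outside reference, such as a verified address list or a load timestamp, so they are harder to score from the records alone.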

Understanding these dimensions helps you pinpoint where your data processes are failing and what the true cost to the business is.

Implementing Automated Validation

While clear ownership and standards are fundamental, people will always make mistakes. Manual checks alone are not sufficient. This is where automated validation rules are crucial for maintaining data quality as you grow.

These are simple checks built directly into your software to catch common errors at the source. They act as a digital gatekeeper, stopping mistakes before they can contaminate your datasets. They do not need to be complicated to be effective.

Here are a few common examples I recommend to most clients:

Required Fields: Make essential information, like a client's ABN or a project's start date, mandatory. This eliminates blank fields where they matter most.

Format Constraints: Force phone numbers or postcodes into a consistent format.

Dropdown Menus: Use predefined lists for items like "Country" or "Lead Status" instead of allowing free text. This simple change eliminates typos and variations.
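Most CRMs and ERPs let you configure rules like these without writing code, and that is usually the best place for them. For illustration, the Python sketch below expresses the same three rule types as one check that runs before a record is saved. The field names and the allowed country list are hypothetical; the ABN and postcode checks test format only (11 and 4 digits respectively) and do not confirm that a number is genuinely registered.

```python
import re

# Hypothetical rules for a CRM "new client" form.
REQUIRED_FIELDS = ("name", "abn", "country")
ABN_FORMAT = re.compile(r"\d{11}")              # ABNs have 11 digits (format only)
POSTCODE_FORMAT = re.compile(r"\d{4}")          # Australian postcodes have 4 digits
COUNTRY_OPTIONS = {"Australia", "New Zealand"}  # stands in for a dropdown list


def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record can be saved."""
    errors = []

    # Required fields: reject missing or whitespace-only values.
    for field in REQUIRED_FIELDS:
        if not str(record.get(field) or "").strip():
            errors.append(f"{field}: required")

    # Format constraints: check the shape of the value, ignoring spaces in ABNs.
    abn = str(record.get("abn") or "").replace(" ", "")
    if abn and not ABN_FORMAT.fullmatch(abn):
        errors.append("abn: must be 11 digits")
    postcode = str(record.get("postcode") or "").strip()
    if postcode and not POSTCODE_FORMAT.fullmatch(postcode):
        errors.append("postcode: must be 4 digits")

    # Dropdown: only values from the predefined list are accepted.
    country = record.get("country")
    if country and country not in COUNTRY_OPTIONS:
        errors.append(f"country: {country!r} is not an allowed option")

    return errors


good = {"name": "Example Pty Ltd", "abn": "12 345 678 901",
        "country": "Australia", "postcode": "2000"}
bad = {"name": "", "abn": "1234", "country": "Austrlia", "postcode": "20000"}

print(validate(good))  # []
for problem in validate(bad):
    print(problem)
# name: required
# abn: must be 11 digits
# postcode: must be 4 digits
# country: 'Austrlia' is not an allowed option
```

Returning every problem at once, rather than stopping at the first, lets the person entering the record fix everything in one pass.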

Implementing these rules in your existing software, such as your CRM or ERP, is often a low-cost yet high-impact initiative. It dramatically reduces manual cleanup, frees up your team's time, and systematically improves the reliability of your data for the long term.

Putting Practical Data Governance in Place

The term "data governance" can cause concern among business leaders, who may imagine more bureaucracy and slower progress. However, it does not have to be that way.

A light-touch approach to governance is a powerful way to keep your data clean and reliable without creating bottlenecks.

Create a Business-Led Data Council

The best starting point is to form a Data Council. This is a small team of senior leaders who are directly responsible for key business functions.

Your council should include the heads of departments like Sales, Operations, Finance, and Marketing. These individuals experience the negative effects of bad data daily. They understand the real-world impact and are well-positioned to make practical decisions on data standards.

This group would typically meet quarterly with a simple agenda:

Agree on clear data standards that work for all departments.

Resolve any cross-department data disagreements as they arise.

Prioritise data quality projects based on what will have the greatest business impact.

When business leaders are in charge, governance becomes a tool for more unified decisions instead of a roadblock.

A Data Council elevates data quality from an "IT problem" to a shared business responsibility. When department heads are accountable, standards are not just documented; they are implemented.

This model naturally encourages collaboration. It breaks down the silos that often create conflicting and messy information, fostering a unified approach to managing a critical company asset.

Essential Tools to Improve Data Quality

You have established data ownership and governance rules. This is a great start. But how do you maintain clean data over time? This is where technology is essential.

Manually checking every piece of data entering your systems is not practical or scalable. The right tools automate this process, acting as a constant monitor that identifies issues before they affect your critical reports and dashboards.

For business leaders, the aim is to understand which types of tools are available and how each one supports your business goals. This approach shifts data quality management from a reactive exercise to an automated function that operates in the background.

Choosing the Right Tools for the Job

Do not seek a single piece of software that does everything. It does not exist. The smart approach is to strategically combine different types of tools to create a comprehensive safety net for your data.

The most effective data quality strategies use a combination of tools to profile, cleanse, and monitor data. This creates multiple layers of defence against small errors that can lead to flawed reports and poor business decisions.

A common mistake is investing in an expensive dashboarding tool before the underlying data is reliable. The better approach is to clean your data before it reaches a final report.

Key Categories of Data Quality Tools

For most mid-sized businesses, focusing on three core tool categories provides the most value. These tools work together to find existing problems, fix them efficiently, and prevent them from recurring.

Data Profiling Tools: These are your first line of defence. Profiling tools scan your databases and provide a clear picture of your data's current state. They are excellent at uncovering hidden issues like unexpected blank fields, inconsistent formatting, or duplicate records.

Data Cleansing Tools: After profiling identifies the problems, cleansing tools help you fix them in bulk. Instead of manually correcting thousands of records, these tools can standardise formats, merge duplicates, and correct common errors automatically based on rules you define.

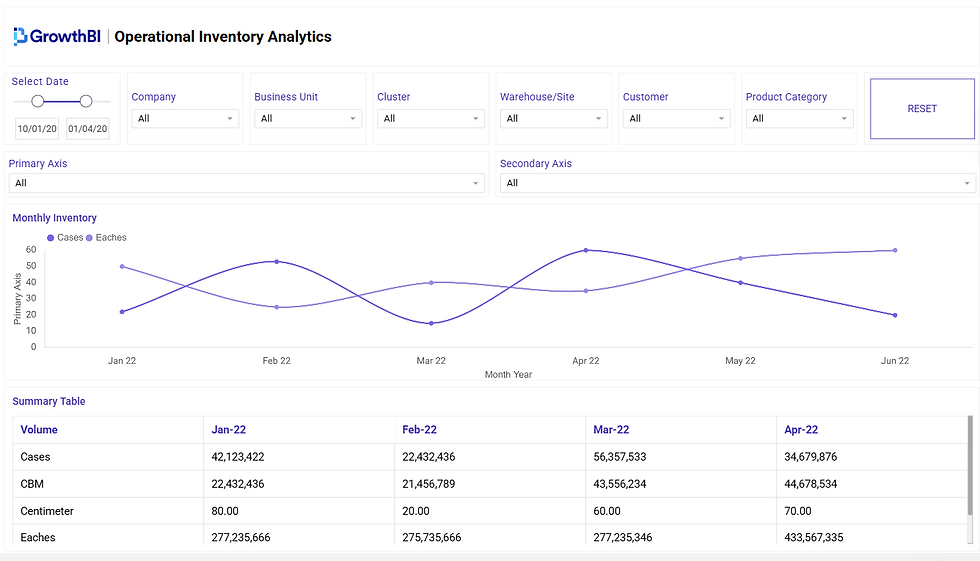

Monitoring and Alerting Dashboards: These are your ongoing health monitors. A good data quality dashboard tracks key metrics over time, such as the percentage of complete records or the number of validation errors per day. When a metric falls below a set threshold, it triggers an alert, notifying the appropriate person to investigate.
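To make the profile-then-cleanse sequence described above more concrete, here is a small Python sketch. The customer records, the list of legal suffixes and the abbreviation map are all hypothetical assumptions; dedicated tools apply far richer matching rules. Profiling reduces each postcode to a pattern (digits become `9`, letters become `A`), which exposes a value where the letter O was typed instead of a zero. Cleansing then standardises company names into a matching key and merges records that share it, letting later copies fill only the gaps in the first.

```python
import re
from collections import Counter

# Hypothetical customer records combined from two systems.
rows = [
    {"customer": "ABC Corp", "postcode": "2000"},
    {"customer": "ABC Corporation Pty Ltd", "postcode": "20OO"},  # letter O, not zero
    {"customer": "Smith & Co", "postcode": ""},
    {"customer": "smith & co.", "postcode": "3000"},
]


# --- Profiling: describe the data before changing it ---
def shape(value: str) -> str:
    """Reduce a value to its pattern: digits become 9, letters become A."""
    return re.sub(r"[A-Za-z]", "A", re.sub(r"\d", "9", value))


def profile(rows: list[dict], field: str) -> dict:
    """Count blanks, distinct values and format patterns for one field."""
    values = [(r.get(field) or "").strip() for r in rows]
    filled = [v for v in values if v]
    return {
        "blank": len(values) - len(filled),
        "distinct": len(set(filled)),
        "patterns": Counter(shape(v) for v in filled),
    }


# --- Cleansing: standardise names, then merge duplicates ---
SUFFIXES = {"pty", "ltd", "limited"}                # assumed suffixes to ignore
ALIASES = {"corporation": "corp", "company": "co"}  # assumed abbreviations


def name_key(name: str) -> str:
    """Lower-case, drop punctuation and legal suffixes, unify abbreviations."""
    words = re.sub(r"[^\w\s&]", "", name.lower()).split()
    return " ".join(ALIASES.get(w, w) for w in words if w not in SUFFIXES)


def merge_duplicates(rows: list[dict], field: str) -> list[dict]:
    """Collapse records sharing a name key; later copies only fill blanks."""
    merged: dict[str, dict] = {}
    for r in rows:
        key = name_key(r[field])
        if key not in merged:
            merged[key] = dict(r)
        else:
            for k, v in r.items():
                if v and not merged[key].get(k):
                    merged[key][k] = v
    return list(merged.values())


print(profile(rows, "postcode"))
# {'blank': 1, 'distinct': 3, 'patterns': Counter({'9999': 2, '99AA': 1})}
print(merge_duplicates(rows, "customer"))
# [{'customer': 'ABC Corp', 'postcode': '2000'}, {'customer': 'Smith & Co', 'postcode': '3000'}]
```

The merge keeps ABC Corp's valid postcode because the first record's non-blank values win. In practice you would still fix or reject the malformed `20OO` flagged by the profile before merging.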

You can learn more about how automated report generation improves data-driven decisions in our other guide.

To help you get a clearer picture of how these tools fit together, here's a simple comparison.

Data Quality Tool Comparison for Mid-Sized Businesses

This table breaks down the different tool categories to help you understand what to look for based on your objectives.

| Tool Category | Primary Function | Best For... |
|---|---|---|
| Data Profiling | Discovering and analysing the current state of your data. | Understanding the scope of data issues before starting a cleanup project. |
| Data Cleansing | Correcting, standardising, and removing erroneous data in bulk. | Fixing widespread, systemic issues found during profiling. |
| Monitoring & Alerting | Tracking data quality metrics over time and flagging anomalies. | Maintaining data quality long-term and catching new issues as they arise. |
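Monitoring is the layer that keeps profiling and cleansing work from quietly unravelling. The Python sketch below shows the basic mechanics of a threshold alert under several assumptions: the metric names, the thresholds and the Head of Finance owner are hypothetical, the daily metric values would come from your own profiling jobs, and the printed messages stand in for whatever email or chat notification you use. It routes each alert to the data owner for that dataset and treats a metric that was not reported as an alert in its own right.

```python
from dataclasses import dataclass


@dataclass
class QualityCheck:
    metric: str       # name of a daily data quality metric
    threshold: float  # lowest acceptable value (0.0 to 1.0)
    owner: str        # data owner who is notified when the check fails


# Hypothetical checks; real thresholds would be agreed with each owner.
CHECKS = [
    QualityCheck("crm.email_completeness", 0.95, "Head of Sales"),
    QualityCheck("erp.defect_code_completeness", 0.98, "Operations Manager"),
    QualityCheck("finance.valid_expense_code_rate", 0.99, "Head of Finance"),
]


def evaluate(todays_metrics: dict[str, float]) -> list[str]:
    """Compare today's metrics with each threshold and build alert messages."""
    alerts = []
    for check in CHECKS:
        value = todays_metrics.get(check.metric)
        if value is None:
            # A missing metric usually means a feed or job has failed.
            alerts.append(f"{check.owner}: {check.metric} was not reported")
        elif value < check.threshold:
            alerts.append(
                f"{check.owner}: {check.metric} is {value:.1%}, "
                f"below the {check.threshold:.0%} threshold"
            )
    return alerts


todays_metrics = {"crm.email_completeness": 0.91, "erp.defect_code_completeness": 0.99}
for alert in evaluate(todays_metrics):
    print(alert)  # in practice, send to the owner by email or chat instead
# Head of Sales: crm.email_completeness is 91.0%, below the 95% threshold
# Head of Finance: finance.valid_expense_code_rate was not reported
```

Treating an absent metric as a failure is a deliberate choice: a silent data feed is a timeliness problem, and it should reach an owner rather than be skipped.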

By thoughtfully combining these tools, you build a robust and automated system. This setup helps to keep the data feeding your strategic dashboards consistently accurate, complete, and trustworthy. Ultimately, it gives you the confidence to make important business decisions without doubt.

From Disagreement to Decisive Action

Consider a typical mid-sized retail company. Before addressing data quality, its weekly leadership meetings were unproductive. The Head of Sales would present a report showing strong growth, only for the Head of E-commerce to present different figures from their platform.

Significant time was wasted trying to determine which report was correct. Each leader defended their team's data, trust eroded, and crucial discussions about market trends were postponed. The root cause was a lack of standard definitions and processes for their data.

After implementing clear data governance and ownership, the dynamic changed.

They established a single, agreed-upon definition of a "sale" used across all systems.

Customer data was cleansed and de-duplicated, providing a single view of each buyer.

Automated reports were built to pull from a central data warehouse that everyone trusted.

The impact on their leadership meetings was significant. With one set of reliable figures, the team could focus on what mattered. Instead of debating sales numbers, they had productive conversations about underperforming product lines and how to reallocate the marketing budget.

The true value of clean data lies in the quality of the conversations it generates. It replaces friction and doubt with the focus and speed that allow your leadership team to perform at a higher level.

The Business Impact of Trusted Data

This clarity has a measurable effect on business performance. When everyone from operations to finance works from the same information, the entire organisation becomes more agile and aligned.

An executive KPI dashboard brings together critical business and data quality metrics, giving leadership a single source of truth for confident, aligned decision-making.

Here’s how that translates into real-world outcomes:

More Confident Decisions: Leaders can act on opportunities without second-guessing the data. This speed is a significant competitive advantage.

Smarter Resource Allocation: You can confidently move budgets, staff, and inventory to the parts of the business with the highest potential return.

Improved Team Alignment: When every department sees the same reality, collaboration improves. Sales, marketing, and product teams can work together seamlessly because they share the same goals and metrics.

Investing resources in your data quality is a direct investment in your company’s collective intelligence. You can learn more about proving the value of your business intelligence initiatives in our other article. High-quality data is the foundation for solid strategy and lasting growth.

At GrowthBI, we build the data foundations that give you complete confidence in your numbers. Learn how GrowthBI can deliver the clarity your business needs.